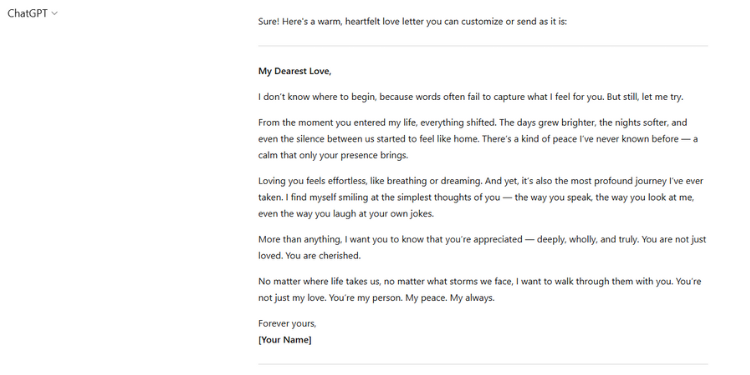

On July 8, 2025, a local Kenyan newspaper ran a light-hearted headline: "Kenyan Men Are Now Using AI to Craft Love Messages and Spice Up Relationships."

The article detailed how Kenyan men, overwhelmed by emotion but short on words, were using ChatGPT to sweet-talk their lovers, compose apology messages, rekindle dying flames, and even pull off strategic ghosting. The language model, with its velvet words and well-trained algorithms, did not disappoint.

At the time, the article seemed like comic relief. A curious tech tale with a romantic twist.

But just three weeks later, the story would take on a much darker undertone.

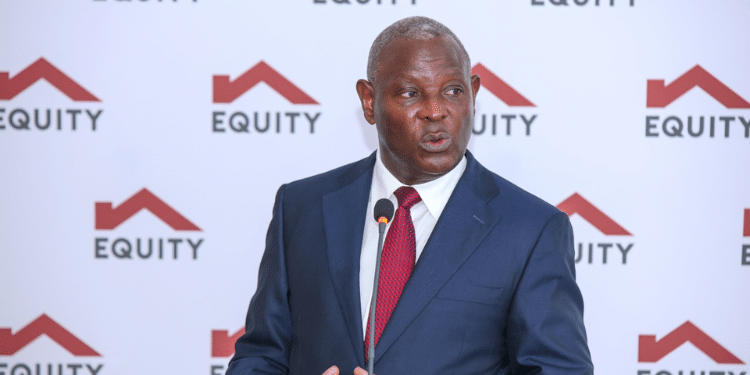

Kenya Crowned Global ChatGPT Champion

On July 30, Kenya was ranked first in the world for ChatGPT use, ahead of tech giants like the United States, Japan, and China. According to DataReportal, 42.1% of Kenyan internet users aged 16 and above had used ChatGPT in June 2025. That's more than four in ten of the country's online adults.

For a moment, this felt like a digital gold medal. Kenyans were no longer just consumers of tech; we were early adopters.

This came against the backdrop of another global statistic that ranked Kenya as the world's top social media consumer. A Cable UK report showed that Kenyans spend an average of 3 hours and 43 minutes a day on social media, well above the global average of 2 hours and 23 minutes.

Then Came the Confession

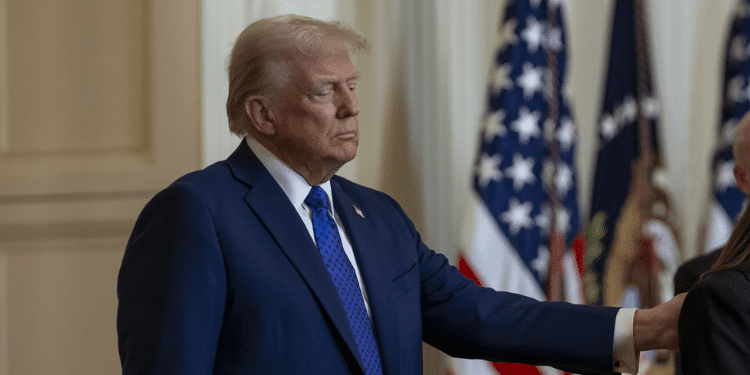

On July 26, 2025, Sam Altman, CEO of OpenAI, the company behind ChatGPT, made a quiet but startling admission. In an interview with podcaster Theo Von, Altman confirmed what many feared but few understood: ChatGPT conversations are not protected by legal confidentiality.

He emphasised that while millions are turning to AI tools for guidance, emotional support, or even informal therapy, the chats are not private and can potentially be adduced as evidence in court.

While OpenAI's policy states that user data is stored for 30 days, Altman acknowledged that this data can be kept longer, especially if flagged or legally requested. And unlike WhatsApp messages, which are end-to-end encrypted, ChatGPT conversations are not. Nor does OpenAI have a legal duty to protect them the way a doctor, lawyer, or therapist would.

If compelled by a court order, OpenAI may have to hand over your chats.

To make matters worse, users recently discovered that some ChatGPT conversations they had shared via public links were appearing in Google search results. This was first reported by the tech-focused outlet Fast Company.

That love letter you generated? That question you asked about money, sex, or mental health? That CV you asked the AI to clean up? Potentially exposed. ChatGPT, it appears, has become our confessional. But it turns out, it never promised to forget.


Kenya’s Double Tragedy: No Laws, No Protection

Unlike the U.S. and EU member states, Kenya (and most of Africa) has no clear legal framework governing the use or protection of AI-generated content. No privacy guarantees. No data protection policies tailored to generative AI.

While the Data Protection Act (2019) offers some safeguards on how data processors handle personal information, it’s largely designed for traditional platforms, not AI engines that “learn” from our chats.

Worse still, Kenya has no AI-specific laws: no provisions that compel companies like OpenAI to guarantee user confidentiality, permanently delete stored prompts, or offer the right to be forgotten. This violates not just our expectations but our constitutional rights.

Article 31 of the Constitution of Kenya guarantees every person the right to privacy, including the right not to have information relating to their family or private affairs unnecessarily required or revealed, and the right not to have the privacy of their communications infringed.

But AI systems like ChatGPT sit in a legal grey zone. They are not regulated locally, yet they are deeply embedded in our lives, advising us on relationships, careers, finances, health, and even academic work.

And it's not just Kenya. Across Africa, countries are struggling to keep up. While South Africa has POPIA (the Protection of Personal Information Act) and Nigeria recently established a Data Protection Commission, few nations have laws that directly govern generative AI.

Further afield, the EU's GDPR (General Data Protection Regulation), which came into effect on May 25, 2018, is more comprehensive, giving people in the EU the Right to Be Forgotten (the legal ability to request the erasure of their personal data from tech platforms). But in countries like Kenya, users are largely left exposed.

Why This Should Terrify You

ChatGPT has become our doctor (asking for symptoms and remedies), therapist (confessing emotional pain), career coach (crafting job applications), lawyer (seeking legal interpretations), and academic partner (drafting and proofreading).

Yet, unlike real-life professionals, ChatGPT has no legal duty to keep your secrets safe. If your chats ever end up in the wrong hands, whether through a court order, a hack, or a leak, you're exposed. You could be blackmailed, humiliated, or worse. And if you think clearing your browser history solves it, think again. The data lives on OpenAI's servers, not just on your device.


We’re All Guilty, But We Must Be Smarter

Like you, I’ve asked ChatGPT questions I probably shouldn’t have. Some silly. Some serious. But this isn’t about guilt. It’s about waking up. We are using AI tools without the rights, laws, or protections to back us up. And that’s not just reckless. That’s dangerous.

So, What Should We Do?

We can’t afford to ban these tools. They’re too powerful, too useful. But we must demand protection.

Here’s what needs to happen, urgently:

- OpenAI must guarantee the Right to Be Forgotten. Every user should have the power to request the complete deletion of their data from ChatGPT servers.

- Kenyan lawmakers must step up and enforce Article 31 by updating the Data Protection Act to cover generative AI.

- The African Union should spearhead continental AI protections that guarantee users’ rights, data, and dignity.

- Users must self-protect. Don’t share sensitive information. Disable chat history. Treat ChatGPT like a public forum, not a private diary.

- Know what not to share. Avoid sharing your real name, ID numbers, passwords, private documents, or deeply personal confessions.

- Remember, not every thought needs to be typed. And not every question needs to be asked online.

Lastly, we live in a country that’s proud to lead in digital adoption, but we can’t lead blindly. Without legal cover, every chat is a potential liability. So yes, ChatGPT has helped us say what we couldn’t. But now, it must also help us delete what we shouldn’t have said.

It’s time we demanded our Right to Be Forgotten, before the bots remember more about us than we do.
