The Court of Appeal has ruled that Facebook’s parent company Meta can be sued for dismissing dozens of moderators in Kenya.

Delivering its verdict on Friday, the Court of Appeal upheld a 2023 ruling that Meta could face trial over the moderators’ dismissals.

“The upshot of our above findings is that the appellants’ (Meta’s) appeals are devoid of merit and both appeals are hereby dismissed with costs to the respondents,” the three judges ruled on Friday.

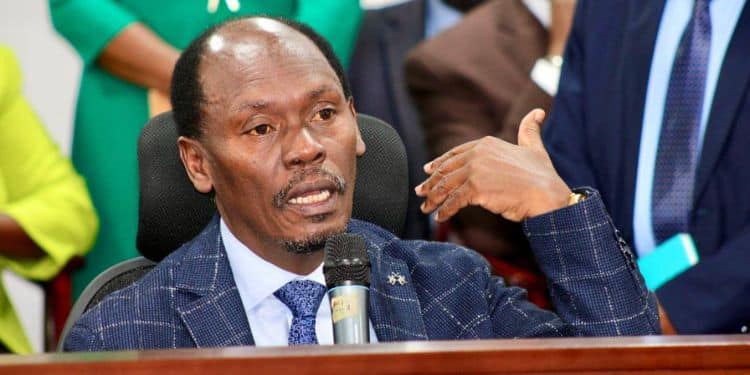

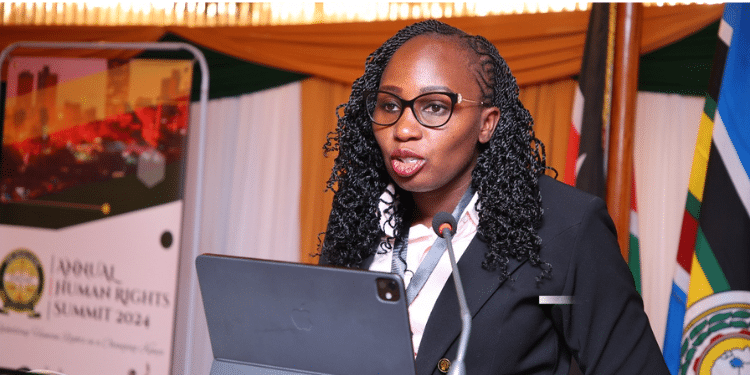

The moderators’ lawyer, Mercy Mutemi, said the case will proceed in the labor court, adding that they are seeking US$1.6 billion (around Ksh 205.6 billion) in compensation.

In 2023, 185 content moderators sued Meta and two contractors, saying they lost their jobs at Sama, a Kenyan company contracted to moderate Facebook content, for trying to unionize.

The moderators are from different African countries and were based in Nairobi before their dismissal.

In the 2023 suit, they claimed that after their termination at Sama, they were blacklisted from applying for the same roles at another firm, Majorel, after Facebook changed contractors. Meta appealed the ruling that allowed the suit to proceed.

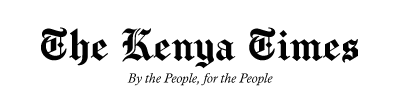

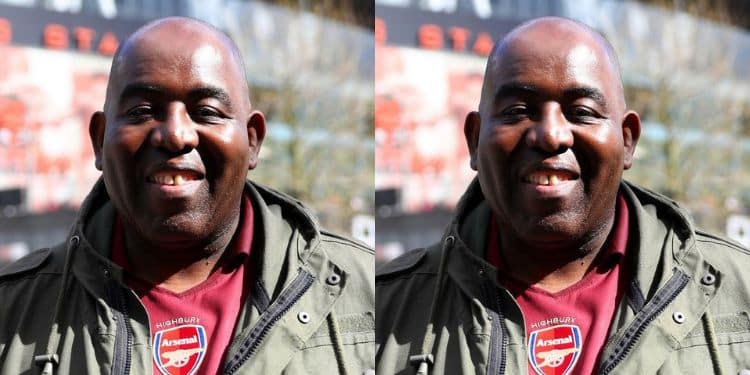

James Irungu, one of the former Facebook moderators involved in the suit, said the ruling was “a significant victory.”

Sama and Meta, for their part, have defended their employment practices in Kenya. Meta had earlier argued that the Employment Court did not have jurisdiction over it.


Another Suit against Meta

Meta has also been sued by Daniel Motaung over claims of poor working conditions, and in February 2023 a court ruled that the company could be sued over the concerns he raised. Meta appealed that decision. Motaung alleged that the company exploited him and his fellow employees and harmed their mental health.

Out-of-court settlement talks between the Kenyan moderators and Meta failed in October 2023. It remains to be seen how the suit will proceed and whether the moderators will get the compensation they are seeking.


Facebook moderators filter out violent and graphic content to ensure the platform’s users are protected from harmful, distressing content.

In 2023, one Facebook moderator told the BBC about the emotional toll content moderation takes on those who do the job. He described having to filter out content such as beheadings so that it did not reach the platform’s users.
